68 - Long Text Generation

Generating long sequences of words, based on long sequences of words with appropriate performance.

There are limits to the input for text generation models. Most models have a limited length around 500-tokens long. This is due to the shortcomings of Recurrent Neural Networks (RNN), resulting in vanishing gradients for long sequences where long-term information has to sequentially travel through all cells before getting to the present processing cell.

Long ShortTerm Memory networks (LSTM) and Gated Recurrent Unit (GRU) require less computations and are better capable of learning and remembering over long sequences, but eventually they also don’t work either for very long sequences.

Vanishing gradients are better solved by using attention based models like Transformers that can parallelly process input in contrast to RNNs. Longformer is a model designed for long sequences. It has an attention mechanism that scales linearly with the input sequence length, compared to most self-attention based models that scale quadratically and therefor require more memory.

BigBird is another model from Google with a sparse attention mechanism that reduces the required computations and memory. BigBird handles inputs that are up to 8 times longer than the original BERT model could handle. Several NLP tasks will benefit from the handling of longer inputs: Long Document Summarization, Question Answering and Genomics Processing.

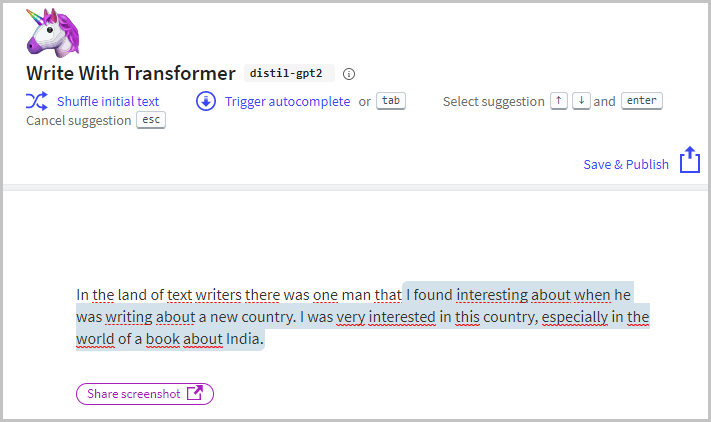

Want to autowrite yourself? Use the Write With Transformers demo to write text, based on an initial text.

This article is part of the project Periodic Table of NLP Tasks. Click to read more about the making of the Periodic Table and the project to systemize NLP tasks.