61 - Distributed Word Representations

Multi-dimensional meaning representations of a word are reduced to a level of N dimensions, so the vectors can be used for similarity measures.

Distributed / Static Word Representations, or Word Vectors or Word Embeddings are multi-dimensional meaning representations of a word, which are reduced to a level of N dimensions. This technique received a lot of attention since 2013 when Google published the algorithm. There were still several challenges.

Original word embedding have one vector per word. A vector typically has 300 or 512 dimensions and 500k words for a large model. This results in embeddings which can grow over 500Mb, which have to be loaded into memory. To reduce this load one can use less dimensions, which makes the vectors less unique. Remove vectors for infrequent words, but these might be the most interesting words. Or map multiple words into one vector (pruning in spaCy), but these words will then be 100% similar to each other.

Encountering out-of-vocabulary words is the word embedding problem of having words for which no vector exists. Subword approaches try to solve the unknown word problem by assuming that you can reconstruct a word’s meaning from its parts.

Lexical ambiguity or polysemy is another problem. A word in a word embedding has no context, so the vector for the word ‘bank’ is trained on the semantic context of ‘river’ bank, but also for the ‘financial’ bank. Sense2vec solves this context-sensitivity partly by taking metainfo into account. The model is trained on words like ‘duck|NOUN’ and ‘duck|VERB’ or ‘Obama|PERSON’ and ‘Obama|ORG’ (e.g. the Obama administration) to be more distinctive on the metainfo-tag (but how about ‘foot’; body part vs scale unit). Nowadays the ambiguity problem is solved by Attention Based Contextualized Word Representations.

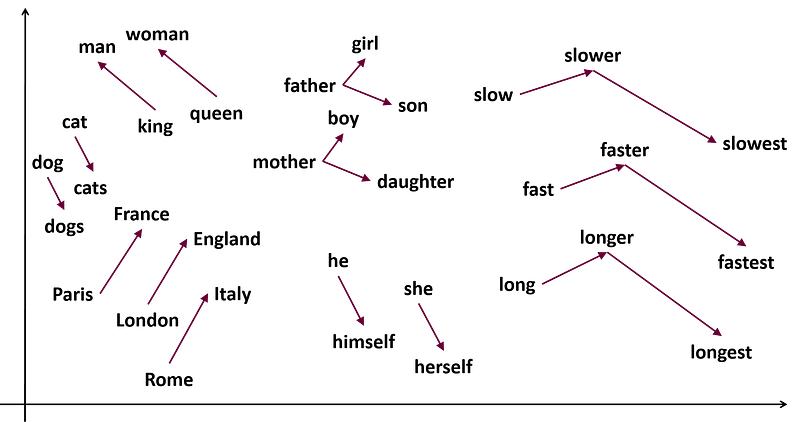

A triggering feature (in the early days) for word embeddings was that they contain semantic relations if the training corpus reflects this. An example is ‘Paris’ is to ‘France’ as ‘London’ is to […]. The embedding can respond with ‘England’. However, it’s not always accurate and deeplearning models are nowadays a better alternative to find these relations.

Best known word embedding models are:

- Word2Vec is the first wordvector algorithm created by Tomáš Mikolov at Google. It is best implemented by Gensim.

- GloVe algorithm is created by Stanford.

- fastText algorithm is created by Facebook and is a subword embedding where each word is represented as a bag of character n-grams. This means that out-of-vocabulary words can be composed from multiple subwords. This makes the algorithm faster, because the embedding is smaller. Trained word vectors for 157 languages are available to download.

- BPEmb is also a subword embedding algorithm. Subwords are based on Byte-Pair Encoding (BPE) which is a specific type of subword tokenization. BPEmb has trained models for 275 languages.

This article is part of the project Periodic Table of NLP Tasks. Click to read more about the making of the Periodic Table and the project to systemize NLP tasks.